This is the first of a two-part manifesto on why solar will power the age of artificial intelligence.

—

AI and solar are both scaling exponentially, and they need each other more than anyone realizes.

Solar + storage can power AI.

“But AI runs 24/7 and solar is intermittent—you can’t run a data center on sunlight.”

We’ve all heard it a thousand times. But it’s missing the point.

The physics works.

The economics work.

What doesn’t work is trying to build AGI on 20th-century energy infrastructure.

If you really want baseload solar, here’s a simple recipe: overbuild PV capacity by 5x or more, add tens of hours of battery storage, and voilà: 24/7 solar.

Exhibit A: Masdar.

The UAE's state-owned renewable energy company is building the world's first gigawatt-scale solar plant delivering round-the-clock power. 5GW PV + 19GWh storage = 1GW of continuous power.

It costs ~$6/W in Abu Dhabi today. That is expensive, but still only about 12% of what an AI data center costs (~$50/W capex).
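To see what the Masdar recipe implies, here is a minimal sketch of the sizing ratios behind the quoted figures. The three inputs come from the text; the function names are ours, and the arithmetic is idealized (it ignores battery round-trip losses, curtailment, and seasonal variation).

```python
# Sizing ratios implied by the plant figures quoted in the text.
# Idealized: no battery losses, no curtailment, no seasonal variation.

PV_GW = 5.0          # nameplate PV capacity (from the text)
STORAGE_GWH = 19.0   # battery energy capacity (from the text)
FIRM_GW = 1.0        # continuous output (from the text)


def overbuild_ratio(pv_gw: float, firm_gw: float) -> float:
    """PV nameplate capacity per unit of firm output."""
    return pv_gw / firm_gw


def storage_hours(storage_gwh: float, firm_gw: float) -> float:
    """Hours a full battery could carry the firm load with no sun at all."""
    return storage_gwh / firm_gw


def min_capacity_factor(pv_gw: float, firm_gw: float) -> float:
    """Lowest average PV capacity factor that still covers 24 hours of firm load."""
    daily_demand_gwh = firm_gw * 24
    daily_nameplate_gwh = pv_gw * 24
    return daily_demand_gwh / daily_nameplate_gwh


print(f"Overbuild ratio:        {overbuild_ratio(PV_GW, FIRM_GW):.1f}x")
print(f"Storage duration:       {storage_hours(STORAGE_GWH, FIRM_GW):.0f} hours")
print(f"Required avg. PV yield: {min_capacity_factor(PV_GW, FIRM_GW):.0%} of nameplate")
```

Under these assumptions the plant is a 5x overbuild with 19 hours of storage, and the PV only has to average 20% of its nameplate output over the day to keep 1GW flowing.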

And while overbuilding PV may sound land-intensive, the actual land needed is surprisingly modest. A 100MW data center covers ~100 acres. Powering it 24/7 with solar would take ~2,500 acres, or about 4 square miles. That’s smaller than a single large airport. High-efficiency perovskite tandems could then cut that by 30–50%. And solar doesn’t need to be next door, just on the same grid.
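The land math can be sketched in a few lines. The 5x overbuild is the recipe from earlier in the piece. The ~5 acres per MW of PV is an assumption backed out from the 2,500-acre figure, not a number the text quotes, and real sites will vary.

```python
# Back-of-the-envelope land estimate for a solar-powered data center.
# ASSUMPTION: ~5 acres per MW of PV, inferred from the article's 2,500-acre
# figure for a 100MW load with 5x overbuild. Not a quoted number.

ACRES_PER_SQUARE_MILE = 640  # exact by definition


def solar_land_acres(load_mw: float, overbuild: float = 5.0,
                     acres_per_mw: float = 5.0) -> float:
    """Acres of PV needed to serve a continuous load of load_mw."""
    return load_mw * overbuild * acres_per_mw


def acres_to_square_miles(acres: float) -> float:
    return acres / ACRES_PER_SQUARE_MILE


def with_efficiency_cut(acres: float, cut: float) -> float:
    """Footprint after a fractional land reduction (e.g. 0.30 for 30%)."""
    return acres * (1.0 - cut)


acres = solar_land_acres(100)
print(f"Today: {acres:,.0f} acres ({acres_to_square_miles(acres):.1f} sq mi)")
for cut in (0.30, 0.50):
    print(f"With a {cut:.0%} cut: {with_efficiency_cut(acres, cut):,.0f} acres")
```

With these inputs the footprint is 2,500 acres (about 3.9 square miles), and a 30–50% reduction from tandem cells would bring it down to roughly 1,250–1,750 acres.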

Baseload solar is coming—but AI doesn’t need to wait for it.

AI only needs massive amounts of new baseload power if you assume two things: flat inflexible load and capacity-limited grids. Both assumptions are fading.

The winning formula is a diverse generation mix: ~90% solar, wind, and storage—with gas, geothermal, and nuclear filling the gaps. Flexible loads and grid diversity already make solar the cheapest incremental power for most new data centers. Soon it will be the obvious choice.

Solar won’t be the only energy source powering AI, but it will be the dominant one.

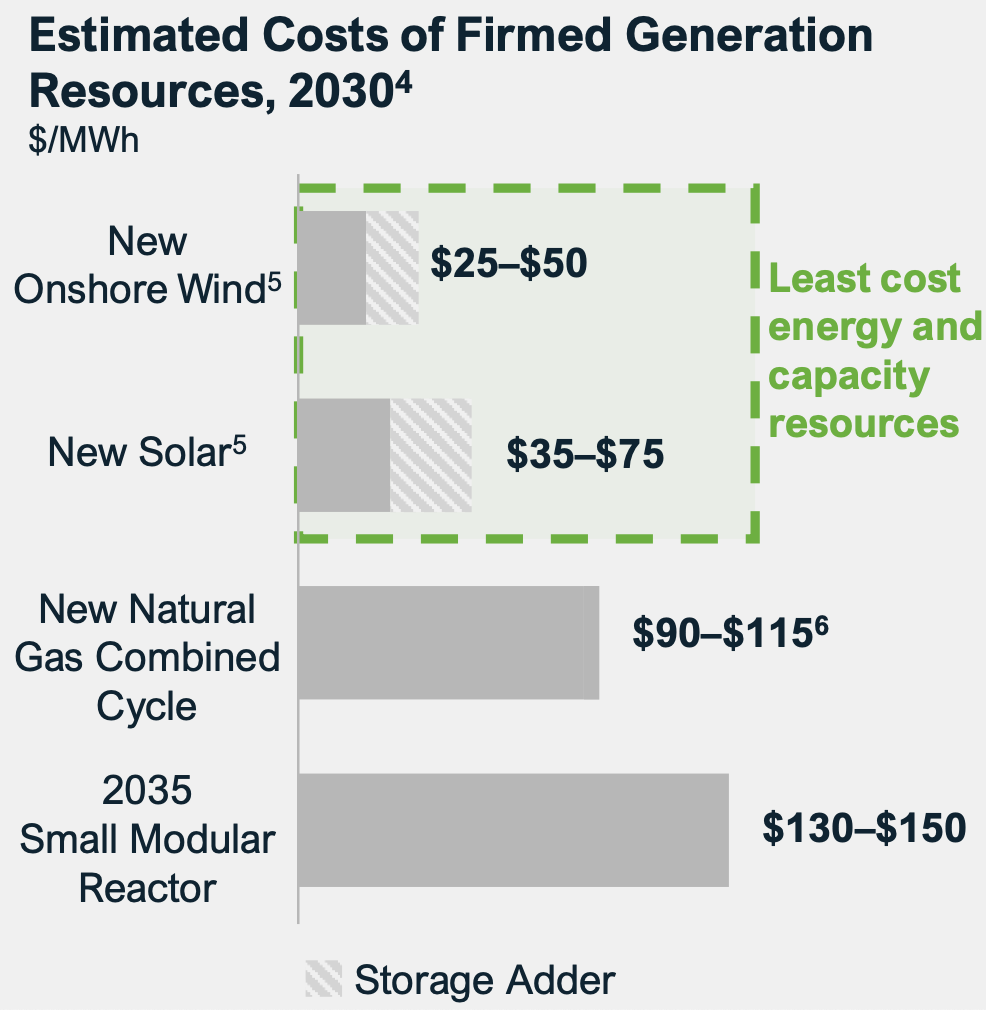

Firm solar electricity—PV plus batteries or gas peakers to guarantee output at times of maximum capacity need—costs ~$100/MWh according to Lazard. That’s on par with gas combined-cycle plants.

But the curves are moving in opposite directions.

Solar and battery costs are falling 20% with every doubling of cumulative production.
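That kind of learning curve (often called Wright's law) compounds quickly. The sketch below shows the arithmetic for a 20% learning rate. The starting cost is a normalized index of 100, a hypothetical value chosen for illustration rather than a real price.

```python
import math

# Learning-curve arithmetic: cost falls by a fixed fraction with each
# doubling of cumulative production. The 20% rate is from the text;
# the starting index of 100 is a hypothetical normalization.


def learning_curve_cost(initial_cost: float, cumulative_ratio: float,
                        learning_rate: float = 0.20) -> float:
    """Cost after cumulative production grows by cumulative_ratio (2.0 = one doubling)."""
    exponent = math.log2(1.0 - learning_rate)  # negative for any positive rate
    return initial_cost * cumulative_ratio ** exponent


def doublings_to_reach(cost_fraction: float, learning_rate: float = 0.20) -> float:
    """Number of doublings needed for cost to fall to cost_fraction of its start."""
    return math.log(cost_fraction) / math.log(1.0 - learning_rate)


for doublings in range(0, 6):
    ratio = 2.0 ** doublings
    print(f"{doublings} doublings ({ratio:>4.0f}x volume): "
          f"cost index {learning_curve_cost(100.0, ratio):.1f}")

print(f"Doublings to halve cost: {doublings_to_reach(0.5):.1f}")
```

At a 20% learning rate, cost halves after a little over three doublings of cumulative volume, which is why a technology on this curve pulls away from one whose costs are flat.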

Utility-scale PV LCOE is dropping even faster. While silicon PV is approaching its efficiency ceiling, perovskite tandem technology extends the runway for efficiency gains and cost reductions for decades. Higher efficiency means fewer panels, less racking, and smaller footprints—cascading 20%+ cost reductions across the system.

Gas turbines are heading the other way.

Bloomberg reports over $400B of planned gas plants at risk because combined-cycle turbine manufacturers can’t keep up. And investors looking to expand turbine capacity need to think twice. Gas will remain an important backup resource—but that’s exactly the problem for new investment. When solar and storage are riding down an exponential learning curve, a flat or rising gas cost curve looks more like an insurance policy and less like a growth bet.

Three reasons—all temporary.

Behind-the-meter solar is starting to bypass the slowest part of interconnection: transmission approval. This isn’t a simple fix for the entire interconnection queue. But every project that generates power where it’s consumed lightens the load on an overstretched grid while accelerating deployment.

As costs keep falling and solar-first data centers prove reliable, these barriers will evaporate.

NextEra has 8.8GW of solar projects lined up to serve data centers and other growing loads by 2027.

Google is building industrial parks powered by gigawatts of renewables—solar, wind, and storage running side by side with servers.

Why? Solar deploys faster than anything else—12–18 months versus 3–5+ years for gas, wind, or nuclear.

The AI infrastructure world is trending toward co-located and behind-the-meter renewables. Hyperscalers expect over a quarter of data centers to be fully powered by onsite generation by 2030.

Some will go even further—off-grid solar campuses with storage and gas backup. No interconnection queues. No transmission bottlenecks. No utility markup. One analysis found such systems could meet 90% of lifetime energy demand from solar for $109/MWh—cheaper than restarting Three Mile Island.

AI doesn’t need miracle energy technology. It needs energy that scales.

And nothing scales like solar.

—

Solar isn't just competitive—it's the only energy source that can scale fast enough and far enough to match the exponential growth of AI.

Stay tuned for Part II: Why solar PV will be the foundation of the AI age.

—

Thanks to Patrick Brown, Jon Lin, Dave Bloom, Nasim Sahraei, and Miles Barr for suggestions and for reading drafts!